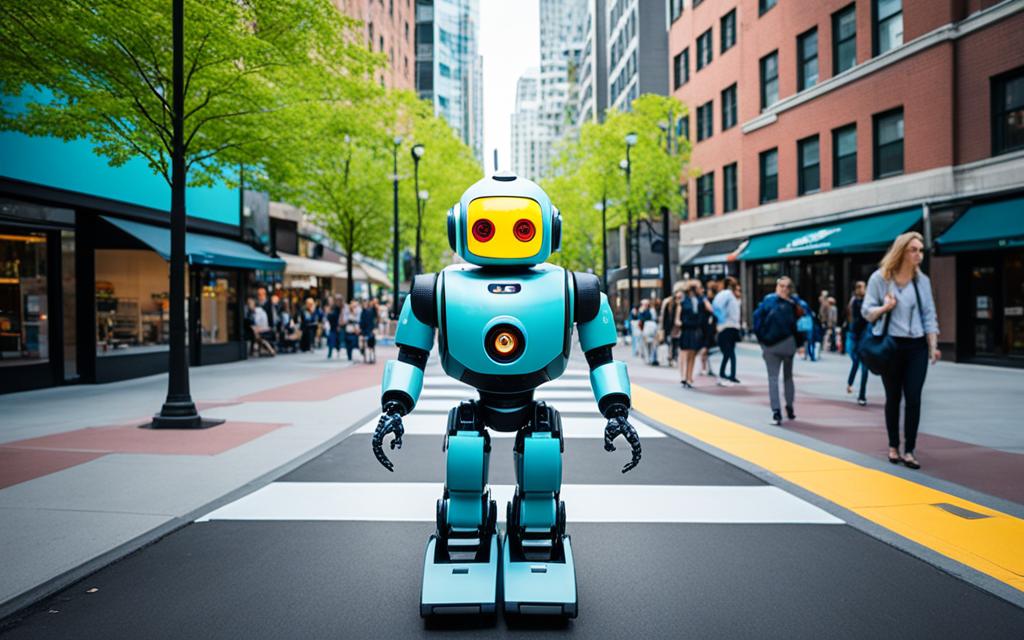

Did you know robots can now see the world, in some tasks, almost as well as humans do? Thanks to rapid progress in computer vision and machine learning, robots have become remarkably good at interpreting what they see. The market for these technologies is projected to reach $17.4 billion by 2025, a sign of how important robots’ visual skills are becoming.

I’m an engineer who loves the place where technology meets nature, and I’m always amazed by how robots see and interact with their world. Using specialized sensors, smart algorithms, and machine learning, they now perceive the world in ways that were once exclusive to humans, recognizing objects and understanding entire scenes.

In this article, we’ll dive into how robotic vision systems work, looking at the technology, algorithms, and applications that are changing how machines perceive the world. You’ll learn how robots can see beyond what human eyes can, combine many “eyes” for better vision, and even read human expressions and gestures. Along the way, I’ll share cool facts and open challenges in robotic vision to inspire you to see the world from a robot’s point of view.

Key Takeaways

- Robots can now see the world with a level of visual perception that, in specific tasks, rivals or even surpasses human vision.

- Advancements in computer vision, machine learning, and sensor technologies have enabled robots to develop sophisticated “eyes” that can analyze their surroundings in incredible detail.

- Robotic vision systems can perceive the world in ways that were once the exclusive domain of humans and animals, such as object recognition and scene understanding.

- Robots can see beyond the visible light spectrum and combine multiple “eyes” for enhanced perception, offering a unique perspective on the world around us.

- Robotic vision is transforming the way we think about machine perception and is finding applications in various fields, from self-driving vehicles to industrial automation.

Introduction to Robotic Vision

Like humans, robots need to see and understand what they see. Robotic vision, which combines sensors and algorithms so that robots can “see” their surroundings, is a cornerstone of modern robotics.

Robots and Human-like Perception

Robots carry visual sensors such as cameras and depth sensors, which capture images and video of their surroundings. The robot’s software then analyzes this data, letting it interpret a scene much as a human would.

The Importance of Visual Perception for Robots

Robotic vision is vital for many tasks. It helps robots move safely, avoid obstacles, and work with objects and people, and it accounts for much of a robot’s intelligence and flexibility.

By letting machines perceive the world much as humans do, robotic vision opens up possibilities across many different fields.

Visual Sensors: The “Eyes” of Robots

Robotic visual sensors are key for machines to see and understand their world. They use cameras, depth sensors, and LiDAR to capture and make sense of visual data. This helps robots move, spot objects, and interact with their environment.

Camera Technologies for Robots

Cameras are the basic eyes of robots. They range from simple single-lens cameras to more advanced stereo and pan-tilt-zoom (PTZ) cameras. A single camera gives robots a 2D view of the world (stereo pairs can also recover depth), which they use to detect objects, track movement, and read facial expressions.
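Stereo cameras estimate depth by triangulation: the same point appears shifted by a *disparity* of d pixels between the left and right images, and for a rectified pair its depth is Z = f·B/d, where f is the focal length in pixels and B is the baseline (distance) between the two lenses. The sketch below assumes the pair is already rectified and the disparity already measured; the focal length and baseline are hypothetical values.

```python
def stereo_depth(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth (metres) of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means the point is at infinity (or no match was found).
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, lenses 12 cm apart.
near = stereo_depth(21.0, 700.0, 0.12)  # about 4.0 m
far = stereo_depth(20.0, 700.0, 0.12)   # about 4.2 m
print(f"{near:.2f} m vs {far:.2f} m")
```

A single pixel of disparity error moves the estimate by roughly 0.2 m at 4 m, and because the error grows with the square of the distance, stereo depth becomes less precise for faraway objects.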

Advanced Visual Sensors: Depth Sensors and LiDAR

Robots also use more advanced sensors for richer perception. Depth sensors measure how far away objects are, giving robots 3D information for avoiding obstacles and mapping scenes. LiDAR fires laser pulses to build a detailed 3D map of the surroundings, helping robots understand their space with great accuracy.
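A depth sensor typically returns a depth image: one distance reading per pixel. Under a pinhole camera model, a pixel (u, v) with depth Z can be back-projected to the 3D point X = (u - c_x)·Z/f_x, Y = (v - c_y)·Z/f_y. The sketch below assumes depth in metres, 0 for missing readings, and hypothetical intrinsics (f_x, f_y, c_x, c_y); a real sensor provides these from its calibration.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_to_points(depth: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float) -> List[Point3D]:
    """Back-project a depth image (metres, 0 = no reading) into 3D camera-frame points."""
    points: List[Point3D] = []
    for v, row in enumerate(depth):          # v: pixel row
        for u, z in enumerate(row):          # u: pixel column
            if z <= 0:
                continue                     # skip pixels with no valid measurement
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A tiny 2x2 depth image with one missing reading; the intrinsics are made up.
cloud = depth_to_points([[1.0, 0.0], [2.0, 2.0]], fx=100.0, fy=100.0, cx=0.5, cy=0.5)
print(cloud)
```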

By combining these sensors, robots get a full picture of their world. This sensor fusion helps them make smart choices and move safely and effectively.
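One of the simplest forms of sensor fusion combines independent measurements of the same quantity, weighting each by the inverse of its variance so that the more reliable sensor counts more. This is the idea behind a single Kalman-filter update; production systems typically run full Kalman or particle filters over time. The readings and variances below are hypothetical.

```python
from typing import List, Tuple


def fuse(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted mean of independent (value, variance) estimates.
    Returns the fused value and its (smaller) variance."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical readings of the distance to the same obstacle:
# stereo camera 4.2 m (variance 0.09 m^2), LiDAR 4.0 m (variance 0.01 m^2).
distance, variance = fuse([(4.2, 0.09), (4.0, 0.01)])
print(f"{distance:.2f} m, variance {variance:.4f} m^2")
```

The fused distance lands close to the more precise LiDAR reading, and its variance is lower than that of either sensor alone.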

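| Visual Sensor | Key Features | Applications |
|---|---|---|
| Camera | Captures 2D images; available as simple, stereo, and PTZ cameras | Object detection, motion tracking, reading facial expressions |
| Depth Sensor | Measures the distance to objects, providing 3D information | Obstacle avoidance, scene mapping |
| LiDAR | Uses laser pulses to build detailed 3D maps | High-accuracy mapping and spatial understanding |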

Computer Vision Algorithms: Making Sense of Visual Data

Robots use many sensors, but their eyes are key to understanding the world. They capture visual data, then use advanced algorithms to make sense of it. These algorithms analyze images and video to find important information.

Feature Extraction and Object Recognition

At the heart of robotic vision is feature extraction: identifying informative patterns in the data, such as edges, corners, and shapes. These features support object recognition, in which robots learn to identify objects.
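Edges are among the simplest features to extract. A classic approach is the Sobel operator, which estimates horizontal and vertical intensity gradients with two 3x3 kernels and combines them into an edge strength. This pure-Python sketch works on a small grayscale image stored as nested lists; real systems use optimized libraries such as OpenCV.

```python
from typing import List

Image = List[List[float]]

# 3x3 Sobel kernels for horizontal (x) and vertical (y) intensity changes.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img: Image) -> Image:
    """Gradient magnitude at each interior pixel; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    p = img[y + dy][x + dx]
                    gx += SOBEL_X[dy + 1][dx + 1] * p
                    gy += SOBEL_Y[dy + 1][dx + 1] * p
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


# Dark left half, bright right half: a vertical edge between columns 1 and 2.
img = [[0, 0, 10, 10, 10] for _ in range(5)]
print(sobel_magnitude(img)[2])  # [0.0, 40.0, 40.0, 0.0, 0.0]
```

The strong responses appear only next to the boundary, while flat regions produce zero, which is exactly what makes edges useful as a compact description of an object's outline.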

Computer vision algorithms use these features to spot a variety of objects. This is key for tasks like managing warehouses, checking assembly lines, and navigating on their own.

Machine Learning for Visual Perception

Machine learning is what makes robotic vision powerful. Many modern vision algorithms are deep neural networks that learn from huge amounts of labeled data, steadily improving their ability to process visual information.
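Deep networks are too large to show here, but the core idea of learning from labeled examples can be sketched with a single-layer perceptron, a much simpler ancestor of today's networks that nudges its weights whenever it misclassifies a training example. The two-number features and class labels below are hypothetical stand-ins for what a real vision pipeline would extract.

```python
from typing import List, Sequence, Tuple


def train_perceptron(samples: Sequence[Sequence[float]], labels: Sequence[int],
                     epochs: int = 20, lr: float = 0.1) -> Tuple[List[float], float]:
    """Single-layer perceptron. Labels must be +1 or -1; returns (weights, bias)."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * activation <= 0:
                # Misclassified (or on the boundary): shift the boundary toward the correct side.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b


def predict(w: List[float], b: float, x: Sequence[float]) -> int:
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Hypothetical 2-number descriptors for two object classes (+1 and -1).
X = [(0.9, 0.1), (0.8, 0.2), (0.2, 0.9), (0.1, 0.8)]
y = [1, 1, -1, -1]
w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])  # [1, 1, -1, -1]
```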

As these models are trained on more data, robots get better at recognizing objects and understanding scenes, and can even anticipate hazards. This helps them move safely through complex places and interact effectively with their environment.

Object Detection and Recognition in Robotic Vision

A key aspect of robotic perception is the ability to detect and recognize objects in the environment. Through advanced computer vision algorithms, robots can identify specific objects by their visual traits like shape, color, or texture. This lets them interact with objects, move safely, and do specific tasks in various settings.
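Object detectors usually output bounding boxes with confidence scores, often with several overlapping boxes for the same object. Intersection over union (IoU) measures how much two boxes overlap, and non-maximum suppression (NMS) uses it to keep only the highest-scoring box in each cluster. A minimal sketch, assuming boxes given as (x_min, y_min, x_max, y_max) and an illustrative 0.5 threshold:

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: Sequence[Box], scores: Sequence[float],
                        threshold: float = 0.5) -> List[int]:
    """Indices of boxes kept after greedily suppressing lower-scoring overlaps."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) < threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]: the second box duplicates the first
```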

By accurately detecting and recognizing objects, robots can make smart choices and act accordingly. This skill is vital for uses ranging from industrial automation to personal assistants: it lets robots identify household items and spot dangerous objects, making them safer and more effective.

With object detection and recognition, robots can move through complex spaces, handle objects accurately, and work with humans more naturally. As computer vision technology matures, it will open up new possibilities for scene understanding and visual perception.

Scene Understanding and Environment Mapping

Robots need to understand their surroundings to move and work well. They use cameras and other sensors to get a full picture of their environment. This helps them know where they are and what’s around them.

Combining Visual Data with Other Sensor Inputs

Robots get a better view of the world by combining visual perception with other sensors. They merge data from cameras, GPS, and more into a detailed map of their space. This sensor fusion helps them perceive more reliably, spot hazards, and plan their moves.
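A common way to turn range measurements into a map is an occupancy grid: space is divided into cells, and each cell keeps a log-odds belief that it is occupied. Cells a beam passes through become more likely free, and the cell where the beam ends becomes more likely occupied. The sketch below assumes a 2D grid indexed as `grid[y][x]`, a robot position and hit points already converted to grid cells, and hypothetical log-odds increments; it traces each beam with Bresenham's line algorithm.

```python
import math
from typing import List, Tuple

Cell = Tuple[int, int]  # (x, y) grid indices

# Hypothetical log-odds increments; real values come from a sensor model.
L_OCC = 0.85
L_FREE = -0.4


def bresenham(x0: int, y0: int, x1: int, y1: int) -> List[Cell]:
    """Grid cells on the straight line from (x0, y0) to (x1, y1), both ends included."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def integrate_scan(log_odds: List[List[float]], robot: Cell, hits: List[Cell]) -> None:
    """Update the grid in place for beams from the robot's cell to each hit cell."""
    for hx, hy in hits:
        ray = bresenham(robot[0], robot[1], hx, hy)
        for cx, cy in ray[:-1]:
            log_odds[cy][cx] += L_FREE   # the beam passed through: more likely free
        log_odds[hy][hx] += L_OCC        # the beam stopped here: more likely occupied


def occupancy_probability(l: float) -> float:
    """Convert log-odds back to a probability (the logistic function)."""
    return 1.0 - 1.0 / (1.0 + math.exp(l))


grid = [[0.0] * 5 for _ in range(5)]
integrate_scan(grid, robot=(0, 0), hits=[(3, 0), (3, 3)])
print(round(occupancy_probability(grid[0][3]), 2), round(occupancy_probability(grid[0][1]), 2))
```

Working in log-odds turns each update into a simple addition, so evidence from many scans accumulates cheaply.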

Autonomous Navigation and Obstacle Avoidance

With a clear picture of their surroundings, robots can navigate on their own, avoiding obstacles and finding the best route to their destination. Obstacle-avoidance algorithms, built on computer vision and other sensors, help them steer around hazards safely and efficiently.
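Once obstacles are marked on a grid, finding a route around them becomes a search problem. The sketch below uses breadth-first search, which finds a shortest path on a 4-connected grid where every step costs the same; real planners often use A* or sampling-based methods instead. The grid encoding (1 = obstacle, 0 = free) is an assumption for illustration.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col)


def shortest_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Breadth-first search on a 4-connected grid where grid[r][c] == 1 marks an obstacle.
    Returns the cells from start to goal, or None if the goal is unreachable."""
    rows, cols = len(grid), len(grid[0])
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            node: Optional[Cell] = cell
            while node is not None:   # walk back through the parents to rebuild the route
                path.append(node)
                node = came_from[node]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0
                    and (nr, nc) not in came_from):
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# A wall (1s) forces the robot around the right-hand side.
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(shortest_path(grid, (0, 0), (2, 0)))
```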

| Sensor Modality | Contribution to Scene Understanding |
|---|---|
| Camera | Provides visual information for object recognition and environment mapping |
| Depth Sensor | Captures spatial data to determine the distance and depth of objects |
| LiDAR | Generates a high-resolution 3D map of the environment using laser scanning |
| GPS | Provides global positioning data for localization and navigation |
| Inertial Measurement Unit (IMU) | Measures the robot’s orientation, acceleration, and angular velocity |

Seeing the world through the “eyes” of a robot

Robots, like humans, use their “eyes” to see and understand the world, but their sensors can capture information that human eyes cannot. By equipping robots with specialized sensors, we help them perceive and understand the world in new ways.

Perceiving Beyond the Visible Light Spectrum

Humans see only visible light, but robots with the right sensors can also detect infrared and ultraviolet light. This lets them sense heat and other things invisible to us. For instance, infrared (thermal) cameras help them see in the dark or locate heat sources, while ultraviolet imaging helps them spot certain materials or chemicals.
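As an illustration of working with infrared data, the sketch below finds hot spots in a thermal frame by thresholding it and grouping neighboring hot pixels into regions. It assumes a radiometric thermal camera that reports a temperature (°C) per pixel; the frame and the 30 °C threshold are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[float]]  # per-pixel temperatures in °C


def hot_spots(thermal: Frame, threshold_c: float) -> List[Tuple[float, float, int]]:
    """Group 4-connected pixels hotter than threshold_c into regions.
    Returns (centroid_row, centroid_col, pixel_count) for each region."""
    rows, cols = len(thermal), len(thermal[0])
    seen = set()
    spots = []
    for r in range(rows):
        for c in range(cols):
            if thermal[r][c] <= threshold_c or (r, c) in seen:
                continue
            # Flood-fill the region that starts at this hot pixel.
            stack, region = [(r, c)], []
            seen.add((r, c))
            while stack:
                y, x = stack.pop()
                region.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols and (ny, nx) not in seen
                            and thermal[ny][nx] > threshold_c):
                        seen.add((ny, nx))
                        stack.append((ny, nx))
            cy = sum(p[0] for p in region) / len(region)
            cx = sum(p[1] for p in region) / len(region)
            spots.append((cy, cx, len(region)))
    return spots


frame = [
    [21, 21, 22, 21],
    [21, 36, 37, 21],
    [21, 35, 21, 21],
    [21, 21, 21, 60],
]
print(hot_spots(frame, 30))
```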

Multiple “Eyes” for Enhanced Perception

Robots rarely rely on a single camera; they often combine multiple sensors for a better view, including depth sensors and LiDAR systems. Depth sensors measure distance, and LiDAR builds 3D maps from laser pulses. Together, these systems help robots understand space, avoid obstacles, and navigate more effectively.
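A LiDAR measures distance by timing each laser pulse's round trip: range = c·t/2, because the light travels to the target and back. Combined with the beam's horizontal (azimuth) and vertical (elevation) angles, each return becomes a 3D point. A minimal sketch, assuming angles in radians and a sensor frame with x forward, y left, and z up:

```python
import math
from typing import Tuple

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_range(round_trip_s: float) -> float:
    """Distance to the target from a pulse's round-trip time."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def lidar_point(range_m: float, azimuth: float, elevation: float) -> Tuple[float, float, float]:
    """Spherical (range, azimuth, elevation) to Cartesian (x, y, z) in the sensor frame."""
    horizontal = range_m * math.cos(elevation)  # projection onto the ground plane
    return (horizontal * math.cos(azimuth),
            horizontal * math.sin(azimuth),
            range_m * math.sin(elevation))


r = tof_range(66.7e-9)  # a round trip of about 67 ns corresponds to about 10 m
x, y, z = lidar_point(r, math.radians(30), math.radians(-2))
print(f"range {r:.2f} m -> ({x:.2f}, {y:.2f}, {z:.2f})")
```

Repeating this for every pulse in a sweep produces the point cloud that the 3D map is built from.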

| Sensor Type | Capability |
|---|---|
| Infrared | Detect heat signatures and see in low light |
| Ultraviolet | Identify certain materials and detect specific chemicals |
| Depth Sensors | Measure distance to objects and create 3D maps |
| LiDAR | Use laser pulses to generate detailed 3D environment models |

Facial Recognition and Gesture Analysis

As robotics advances, recognizing human faces and understanding gestures is becoming essential. Thanks to computer vision and machine learning, robots can now detect and identify faces, and read human expressions and body language.

This skill helps robots infer what people are feeling and what they want. By noticing faces and gestures, robots can adapt their behavior, making their help more tailored and their interactions smoother.
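Many face-recognition systems work by mapping each face image to a numeric embedding with a neural network and then comparing embeddings, since faces of the same person land close together. The sketch below shows only the comparison step, using cosine similarity against a gallery of enrolled people. The embedding model is assumed, the three-dimensional vectors are hypothetical (real embeddings are much longer), and the 0.8 threshold is illustrative.

```python
import math
from typing import Dict, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(query: Sequence[float], gallery: Dict[str, Sequence[float]],
             threshold: float = 0.8) -> Optional[str]:
    """Name of the most similar enrolled face, or None if nobody is similar enough."""
    best_name, best_score = None, threshold
    for name, embedding in gallery.items():
        score = cosine_similarity(query, embedding)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical enrolled embeddings (real ones come from a face-recognition model).
gallery = {"alice": [0.9, 0.1, 0.2], "bob": [0.1, 0.8, 0.5]}
print(identify([0.88, 0.12, 0.25], gallery))  # alice
print(identify([0.0, 0.0, 1.0], gallery))     # None: matches nobody well
```

The threshold is what lets the robot say "I don't know this person" instead of forcing every face onto the closest enrolled name.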

Improving Human-Robot Interaction

Adding facial recognition and gesture analysis to robots has changed how humans and robots interact. Robots now respond more naturally to what people do, which makes interacting with them feel more like talking to another person.

For instance, a smart home robot can greet its owner by name and adjust the lights and temperature to their preferences. In a hospital, a robot can interpret a patient’s gestures to figure out what they need.
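Gesture analysis often starts from body keypoints (wrist, elbow, shoulder, and so on) produced by a pose-estimation model. As a minimal, hypothetical example of how a hospital robot might notice a patient raising a hand, the sketch below checks whether the wrist stays above the shoulder for several consecutive frames. The keypoint format and the five-frame window are assumptions.

```python
from typing import Dict, List, Tuple

Keypoints = Dict[str, Tuple[float, float]]  # name -> (x, y) in pixels; y grows downward


def hand_raised(frames: List[Keypoints], min_frames: int = 5) -> bool:
    """True if the wrist is above the shoulder for at least min_frames consecutive frames."""
    run = 0
    for kp in frames:
        if kp["wrist"][1] < kp["shoulder"][1]:  # smaller y means higher in the image
            run += 1
            if run >= min_frames:
                return True
        else:
            run = 0  # streak broken; start counting again
    return False


down = {"wrist": (120.0, 300.0), "shoulder": (110.0, 200.0)}
up = {"wrist": (125.0, 150.0), "shoulder": (110.0, 200.0)}
print(hand_raised([down, up, up, down, up, up, up, up, up]))  # True
```

Requiring several consecutive frames filters out brief, noisy keypoint detections that would otherwise trigger false alarms.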

Personalized Assistance through Visual Cues

Recognizing faces and gestures lets robots tailor their help to each person, learning what each user likes and needs and fitting their assistance to that person’s daily life.

This is really useful in places like shops, schools, and homes for the elderly. Robots use visual cues to make their help more engaging and focused on the user.

As robotic perception grows, facial recognition and gesture analysis will be key to better human-robot interaction, making it more natural and personal.

Adaptability and Learning in Robotic Vision

One remarkable feature of modern robotic vision systems is that they improve over time. Using machine learning, they learn to recognize more objects and understand scenes better as they go.

They can adapt to new places and learn to do new tasks. This makes them better at seeing and doing things.

The ability to adapt and learn is key for robots to work well in many places. It helps them deal with surprises and get better at what they do. This makes them more reliable in their tasks.

Training Algorithms with Large Datasets

Advances in machine learning have changed how robots see and interact with the world. By training on large, diverse datasets, vision systems get better at spotting objects, recognizing patterns, and understanding complex scenes.

Adapting to New Environments and Tasks

Robotic vision systems can adapt to new places and tasks, such as moving through unfamiliar areas, recognizing new objects, or learning new skills, often with the help of additional training data. They keep getting better at seeing and acting as they go.
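One simple way a vision system can pick up a new object class without retraining from scratch is a nearest-class-mean classifier over feature vectors: each class is represented by the average of its examples, so adding a class just adds a new average. The sketch assumes features have already been extracted (for example, by a pretrained network); the labels and 2D features are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence


class NearestClassMean:
    """Keeps a running mean feature vector per class; new classes can be added at any time."""

    def __init__(self) -> None:
        self.sums: Dict[str, List[float]] = {}
        self.counts: Dict[str, int] = {}

    def learn(self, label: str, features: Sequence[float]) -> None:
        if label not in self.sums:
            self.sums[label] = [0.0] * len(features)
            self.counts[label] = 0
        self.sums[label] = [s + f for s, f in zip(self.sums[label], features)]
        self.counts[label] += 1

    def predict(self, features: Sequence[float]) -> Optional[str]:
        best, best_dist = None, math.inf
        for label, total in self.sums.items():
            mean = [v / self.counts[label] for v in total]
            dist = math.dist(mean, features)  # Euclidean distance to the class mean
            if dist < best_dist:
                best, best_dist = label, dist
        return best


clf = NearestClassMean()
clf.learn("cup", [0.9, 0.1])
clf.learn("cup", [0.8, 0.2])
clf.learn("box", [0.1, 0.9])
print(clf.predict([0.85, 0.15]))   # cup
clf.learn("ball", [0.5, 0.5])      # a brand-new class from a single example
print(clf.predict([0.52, 0.48]))   # ball
```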

| Adaptability Feature | Benefit |
|---|---|
| Continuous Learning | Enables robots to enhance their visual recognition and understanding over time |
| Flexibility in New Environments | Allows robots to adapt to diverse settings and handle unexpected situations |
| Task Adaptation | Empowers robots to learn and perform specialized functions based on visual cues |

Learning and adapting are key for modern robotic vision systems. They help robots work well in many places and tasks. By getting better at seeing, these systems are set to play a big role in the future of automation and working with humans.

Challenges and Future Developments

Robotic vision systems are getting better, but big challenges remain. Two areas need particular work: computational power and efficiency, and real-time processing and decision-making.

Computational Power and Efficiency

Robotic vision needs a lot of computing power to interpret complex images and make smart choices. Reducing energy use and size is especially important for mobile robots and wearable devices. New chip designs, faster processing, and more efficient algorithms will help solve these problems.
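A quick back-of-the-envelope calculation shows why computing power is such a bottleneck. Assuming a single uncompressed 1080p RGB camera at 30 frames per second with 8 bits per channel, the raw data rate is 1920 × 1080 × 3 × 30 ≈ 187 MB/s before any algorithm has even run:

```python
def raw_data_rate(width: int, height: int, channels: int, fps: float,
                  bytes_per_channel: int = 1) -> float:
    """Uncompressed sensor output in bytes per second."""
    return width * height * channels * bytes_per_channel * fps


rate = raw_data_rate(1920, 1080, 3, 30)
print(f"{rate:,.0f} bytes/s (~{rate / 1e6:.0f} MB/s)")  # 186,624,000 bytes/s (~187 MB/s)
```

Multiplying by the number of cameras, and adding depth and LiDAR streams, shows how quickly a mobile robot's compute and power budget fills up.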

Real-Time Processing and Decision-Making

Some robotic vision tasks, such as driving a self-driving car or monitoring for safety hazards, must run in real time. Making these systems both fast and accurate is hard: it requires advances in computer vision, machine learning, and efficient data handling. Researchers keep finding ways to make these systems faster and more reliable.
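Real-time requirements can be made concrete with two numbers: the time available per frame (1000 / fps milliseconds) and how far a vehicle travels while the system is still processing (speed × latency). The sketch below uses hypothetical per-stage timings and a speed of 72 km/h.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process each frame before the next one arrives."""
    return 1000.0 / fps


def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance covered while the perception pipeline is still working."""
    return (speed_kmh / 3.6) * (latency_ms / 1000.0)


# Hypothetical per-stage latencies for one frame, in milliseconds.
stages = {"capture": 8.0, "detection": 45.0, "tracking": 7.0, "planning": 15.0}
total = sum(stages.values())
budget = frame_budget_ms(30)
print(f"pipeline {total:.0f} ms vs budget {budget:.1f} ms -> fits: {total <= budget}")
print(f"at 72 km/h the car moves {distance_during_latency_m(72, total):.2f} m meanwhile")
```

In this made-up example the 75 ms pipeline misses the 33 ms budget of a 30 fps camera, so it would have to skip frames or overlap stages, and the car covers 1.5 m before it can react.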

Looking ahead, improvements in computing power, energy efficiency, and real-time decision-making will lead to more advanced robotic vision, opening up new uses in self-driving cars, drones, and robots that help people at home.

Applications of Robotic Vision

Robotic vision systems are used in many areas, like self-driving cars, drones, and industrial automation. They help robots see and interact with the world in new ways. This is changing many industries.

Self-Driving Vehicles and Drones

In self-driving cars and drones, robotic vision is key. Advanced computer vision and sensor fusion help these systems navigate safely. They can detect obstacles and make smart choices.
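A basic quantity behind many collision-avoidance decisions is time to collision (TTC): the distance to an obstacle divided by the speed at which the gap is closing. A minimal sketch, assuming distance and speeds have already been estimated by the perception stack; the 2.5 s braking threshold is a hypothetical value, not a standard.

```python
import math


def time_to_collision(distance_m: float, ego_speed_mps: float, obstacle_speed_mps: float) -> float:
    """Seconds until impact if both keep their current speed along the same line."""
    closing_speed = ego_speed_mps - obstacle_speed_mps
    if closing_speed <= 0:
        return math.inf  # gap is constant or growing: no collision on this course
    return distance_m / closing_speed


def should_brake(ttc_s: float, threshold_s: float = 2.5) -> bool:
    return ttc_s < threshold_s


ttc = time_to_collision(30.0, 20.0, 5.0)  # closing at 15 m/s
print(ttc, should_brake(ttc))             # 2.0 True
```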

Thanks to computer vision and robotic perception, self-driving cars and drones can move safely and efficiently, making transportation better.

Industrial Automation and Inspection

Robotic vision is also changing industrial automation and inspection, with uses in manufacturing, logistics, and non-destructive testing. These systems can spot defects and take precise measurements.
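One classic inspection technique is golden-template comparison: an image of the part is compared pixel by pixel with an image of a known-good part, and large differences are flagged as possible defects. The sketch assumes both grayscale images are already aligned and equally lit; the tolerance and the allowed number of defect pixels are hypothetical tuning parameters.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale values 0-255


def find_defects(image: Image, template: Image, tolerance: int = 30) -> List[Tuple[int, int]]:
    """(row, col) of every pixel that differs from the reference by more than tolerance."""
    return [
        (r, c)
        for r, (row_img, row_ref) in enumerate(zip(image, template))
        for c, (p, q) in enumerate(zip(row_img, row_ref))
        if abs(p - q) > tolerance
    ]


def passes_inspection(image: Image, template: Image, tolerance: int = 30,
                      max_defect_pixels: int = 0) -> bool:
    return len(find_defects(image, template, tolerance)) <= max_defect_pixels


golden = [[200, 200, 200], [200, 200, 200]]
part = [[200, 195, 200], [200, 120, 200]]  # slight noise at (0, 1), a dark scratch at (1, 1)
print(find_defects(part, golden), passes_inspection(part, golden))  # [(1, 1)] False
```

The tolerance absorbs small variations such as sensor noise, so only the scratch is reported.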

This technology is making things more efficient and improving quality. As robotic vision technology gets better, we’ll see more new uses. It’s changing the future of many fields, from cars to smart factories.

Conclusion

Exploring robotic vision has shown me how far we’ve come in computer vision and machine perception. These advancements are changing our lives in big ways. Robots now understand their world better, thanks to visual sensors, advanced algorithms, and machine learning.

Looking ahead, the future of robotic vision is bright. I see technology getting better, leading to new things robots can do. They will become a big part of our daily lives, making tasks easier and more efficient.

The future of robotic vision is full of possibilities. Computer vision and machine perception can improve areas such as self-driving cars, factories, and assistive technology for people with disabilities. As the field moves forward, robots will change how we see and interact with the world.